AI and the theft dilemma

I was talking to a friend about whether we should even be using AI, from a moral standpoint. Isn't the whole thing built on stolen data?

Maybe? The data used to train most big LLMs was taken from the work of artists, news agencies, and authors, along with other copyrighted material.

But the laws aren't clear. What's fair use under copyright law?

I've learned from and been inspired by many authors. I'm not saying my writing is even close to those people, but I try to learn what I can. And I probably "steal" too, but it's unconscious! I don't mean to, and I try to link and reference whenever relevant.

The key differences with LLMs are scale and intent. AI systems process millions of works systematically, often without any mechanism for attribution or compensation. When I'm inspired by authors, I'm still creating something distinctly mine through my lens of experiences and perspective.

Going further back, our society is built on the backs of people who stole land, resources, and even people. Just look at Stuff the British Stole. Some Canadians, including me, benefit from all those stolen goods. Some people, including First Nations and my ancestors in India, lost out in this great theft.

You'd think we'd learn from that dark colonial past and not repeat those mistakes with technology like AI.

Nope. Not yet anyway.

Accountability Is Lacking

How do you help people account for what they've stolen? You have laws and you enforce those laws.

Unfortunately, we're living in a time when governments are reluctant to hold people to account. Look at white-collar crime such as real estate fraud. People get away with it in Canada.

And then there’s the lack of accountability we’re witnessing in the US government. The administration breaks laws on purpose, and it is sometimes held to account and sometimes not.

Is that our society today? For people with power, it seems like it doesn't really matter if you break a law. Laws only matter if you aren't rich and can't afford to defend yourself. Super depressing, but that's the world.

Sometimes you see it live. Sam Altman, the CEO of OpenAI, had a telling reaction during a TED interview on the question of IP theft (starts at the 2:11 mark):

His mask dropped for a moment. Did you see it?

"Clap about that all you want, enjoy."

The rest of his answer is a nothing burger. He rambles about what's fair use. He knows there will be no consequences.

If Consequences Don't Matter, Then What Does?

Cory Doctorow makes the point in a recent interview:

"If you're insulated from consequences, you don't have to care...What this means is that these companies get to be too big to fail, that they become too big to jail, and then they become too big to care, because there's no consequences for hurting us."

'The Internet Sucks.' Cory Doctorow Tells How to Fix It | The Tyee

So for now I'm using AI, learning about it, and building the next part of my career with it. I'm trying to avoid outright stealing by being transparent about where I'm using AI, and by making sure my writing always reflects my own thoughts, ideas, and examples. If anything, AI is going to keep stealing from me, but I’m making the tradeoff for faster editing.

There’s a good chance I’ll live to regret it.

I think it's a struggle for many of us to figure out how to reconcile all this.

What Can We Do?

We need laws. Laws that AI companies have to follow. We also need better privacy laws and informed citizens who understand what it means to have data privacy.

I still have conversations with people who say, "What does it matter what I share on Facebook? I'm not important. Who cares?" I think more people are starting to wake up to the reality that privacy matters. I see fewer people posting pictures of their kids.

AI is learning even more about us. I try not to share personal things with AI, but it does know my name. It’s a wild west, with little protection.

You’d hope our laws might protect us, but our laws aren't keeping up. In some cases, like bills in front of the US Senate, lawmakers are trying to prevent AI regulation for the next 10 years. The AI companies that fund those lawmakers know that laws are the only thing that can keep them in check. Follow the money, and follow the data.

Personally, I don't want to be that involved with politics or with learning about laws. I'd like to trust that the people we vote for understand these issues and are doing the right thing. This issue is huge and complex though, with powerful interests and pressure, so as a citizen, I'm spending more time informing myself. I think this will be required of many of us soon.

Glimmers of Hope?

The EU is taking steps to rein in Big Tech with more regulation. They've passed the Digital Markets Act and Digital Services Act, designed to make digital markets and services fairer, safer, and more transparent.

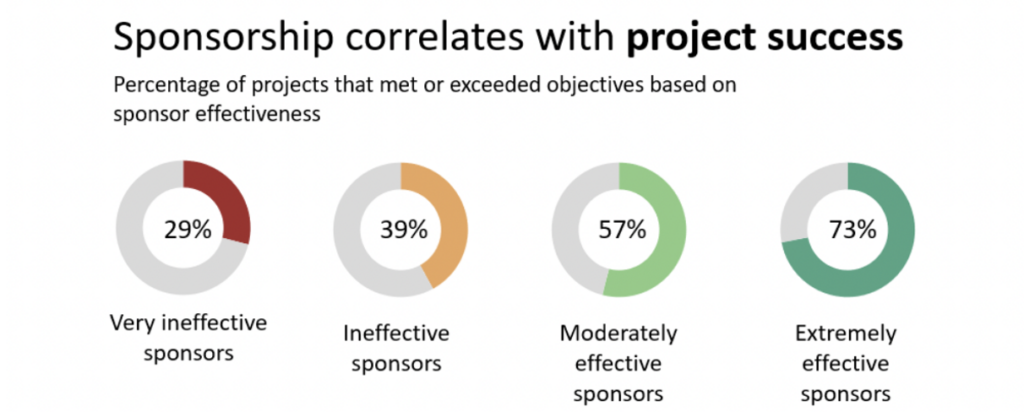

What's interesting is the enforcement piece. The European Commission can impose fines of up to 10% of a company's total worldwide annual turnover, or up to 20% for repeated violations. That's the type of meaningful consequence that could actually change behavior, because it can’t be brushed off as the cost of doing business. (The Digital Markets Act: ensuring fair and open digital markets - European Commission)

Apple is appealing EU fines in court as of June 2025

The EU is essentially saying: "We don't care how big you are. These are the rules, and the penalties will hurt if you don't follow them." Big Tech is fighting back against them, but I hope the law prevails.

Canada's Opportunity

The current tariff war might be an opening to review laws like Bill C-11, Canada's Copyright Modernization Act, passed in 2012.

Bill C-11 includes what Doctorow calls "digital locks" provisions. These are essentially anti-circumvention rules similar to the US DMCA.

Want to make cheaper printer ink or create a competing app store? You really can't. This law stops you from building products or services that work around digital restrictions.

The "digital locks" provision means that even if you have a legal right to do something (like make a backup copy), if there's a technological barrier, circumventing it becomes illegal. This is regardless of whether your underlying purpose was legitimate.

As Doctorow explains,

"…if a manufacturer uses computer code to control what you do with the things you buy, you can't overrule them." (The Globe and Mail)

Companies can essentially create their own mini-copyright regimes through technology, and the law backs them up by making it illegal to work around those restrictions, even for lawful purposes.

Canada could, as part of this trade fight, consider unwinding this law. How would Big Tech react then?

This is not directly an AI law, but my point is that we need new laws, or better versions of existing ones like this, so Canadian companies and individuals can compete and have IP protection where it makes sense.

There is hope (Pluralistic):

"If enshittification isn't the result of a new kind of evil person, or the great forces of history bearing down on the moment to turn everything to shit, but rather the result of specific policy choices, then we can reverse those policies, make better ones and emerge from the enshittocene."

Back to the Original Question

So back to my original question: Should we be using AI built on stolen data? I still don't have a clean answer. But I know this: until we have laws with real enforcement (the kind the EU is trying, the kind Canada could try), we're just accepting that theft is fine as long as you're big enough to get away with it.

I can't ignore AI. It's part of my career now, paying for the food I eat. So I want to find ways to use it that feel less morally compromised. Maybe that means supporting more open AI models when they exist. Maybe it means paying living artists and writers whenever I benefit from their work. Maybe it means advocating for licensing deals that actually compensate creators.

But do the AI companies want to do that? The Sam Altman "clap about that all you want, enjoy" moment suggests they're not particularly interested in changing the system that got them here. Why would they be? The current approach is working great for them.

For now, I'm using these tools while pushing for better rules. But every time I ask Claude or ChatGPT for help, I'm reminded: this convenience comes at someone else's expense.

AI tools used: Claude for editing grammar and flow