AI and the case for cautious adoption

I was inspired by this developer blog post about AI skeptics, and I wanted to explore similar ground from my own perspective.

I have friends, clients, and people in my life who resist or are skeptical of AI. I totally get it—sometimes I resist it too. But I'm also concerned about ignoring something that is speeding out of the train station and not slowing down.


What about our preparedness? You can't understand what's coming without using it, right? As I've learned, ignoring something generally makes a problem worse later.

Then there are others who use AI daily, multiple times a day, almost to the point where they can't imagine life before it.

I'm somewhere in between. I'm cautiously using it, and being as intentional as I can about where I bring AI into my life.

I've been building websites since the 90s (Geocities, RIP!), so I'm naturally curious about new tech. AI is the second biggest change I've seen—the first was the internet. I know my work will look completely different in 3-5 years, so I feel I have no choice but to get on this train.

But I know not everyone is built like me. So this is my attempt at a conversation—not to convince you to join the dark side, but to explore the nuance. Because like most complicated things, AI isn't black and white.


But...it's theft

AI is built on theft, as I wrote about recently. If your moral argument against using AI is that it's built on stealing, I get it. AI companies scraped billions of copyrighted works without permission or compensation, including books, articles, artwork, code, and music. That should be illegal, and in some cases publishers, the New York Times, and others are suing AI companies in the courts.

Artists need to be compensated fairly for their work. This has been a problem for musicians for decades. From Napster to iTunes to Spotify, their pay keeps getting eroded. Now it's happening to writers, visual artists, filmmakers, animators, and more.

But here's where it gets complicated. Some AI companies are starting to adapt. OpenAI has licensing deals with publishers. Adobe is training AI only on licensed content. Canva has a fund to pay creators who allow their content to be used to train AI models. There are emerging AI tools built entirely on opt-in, compensated datasets.

The cat's out of the bag on the models trained on scraped data, but the future doesn't have to look the same. I hope it becomes the norm for artists to be paid for licensing their work to AI tools, just like they get paid by Getty Images or Random House.

The question becomes: if there were AI tools built ethically, with proper compensation and consent, would that change the moral calculus? Or is the current foundation so tainted that we can't move forward?

I don't have a perfect answer. But I do think supporting companies that are trying to do this right, while pressuring lawmakers to protect creators, is more productive than a blanket boycott.

Lawmakers, are you listening?

But...the jobs

Yes, the jobs.

Talked to any weavers, lamplighters, blacksmiths, manual typesetters, or typing pools lately? "Computer" was also a job back in the day, if you remember the movie Hidden Figures. Jobs keep changing, generation after generation.

What's happening now is that the job market is changing FAST. The AI evangelists want us to believe that AI is going to replace all knowledge workers overnight, but I don't buy it. Not yet, anyway.

I mean, we've had this AI thing going for 3 years now, and how much has GDP or productivity actually improved? If it's really so transformative, shouldn't we be producing more than ever? The productivity gains we keep hearing about aren't showing up in measures that matter to a healthy society and economy.

But here's what I see happening: AI is becoming really good at handling routine knowledge work. The kind of tasks that used to take hours can now be done in minutes. Meeting minutes, basic analysis, research summaries, focused coding tasks. Not the complex, creative, strategic thinking necessarily, but the scaffolding around it.

This means the nature of knowledge work is shifting. Less time on the grunt work, more time on the judgment calls, the creative problem-solving, the human relationship stuff that still matters.

My job title, what I do, how I work, the tools I use—all of that will change. But avoiding AI isn't going to preserve the jobs of 2020. It's just going to make adapting to the jobs of 2030 harder.

There's truth in that saying:

"You won't be replaced by AI, but you will be replaced by someone who knows how to use AI."

But...the climate

Headlines warn that AI will use more energy than small countries. The IEA estimates that electricity consumption from data centers, AI, and cryptocurrency could double by 2026. That total would be roughly equivalent to Japan's entire electricity consumption.

That sounds alarming, and it is. But context matters.

Climate change is a huge problem, but focusing on AI energy use is like watching the goldfish while missing the sharks. EVs, heat pumps, air conditioning, new factories, industrial processes—these are driving much larger increases in electricity demand. And governments worldwide are pursuing these aggressively because they're essential for decarbonization and economic growth.

Plus, much of the growing electricity demand is coming from places like China and India, where billions of people are rightfully seeking the quality of life we've enjoyed in the West. Per capita electricity use in Canada, the US and the EU is actually declining.

Here's the uncomfortable truth: if you've taken a plane ride in the past couple of years, that single flight probably has a bigger climate impact than all your AI use combined. If you drive a gas car, heat your home, eat meat regularly, or buy stuff shipped from overseas, those are much bigger climate factors than asking Copilot to help with your emails.

I'm not saying AI's energy use doesn't matter; it does. Tech companies should be investing in renewable energy and efficient data centers (and many are). But if climate is your primary concern with AI, there are probably a dozen bigger changes you could make in your daily life first.

The climate crisis won't be solved or lost based on AI adoption. It'll be won or lost on energy policy, industrial transformation, and the choices we make on how to power our entire civilization.

But...it lies

Yes, it hallucinates. Sometimes to hilarious effect, and sometimes with serious consequences.

AI doesn't understand what's true or false, what actually happened or what's made up. It makes educated guesses based on patterns in its training data, but it doesn't actually know anything.

As Science News Today explains:

They are trained to predict the next word or token in a sentence based on statistical patterns in vast amounts of text data...The model isn’t trying to lie; it’s simply guessing what the next part of the response should be, and sometimes, that guess is wrong.

What's particularly unsettling is how confident AI can sound when it's completely wrong. It'll double down on mistakes with the same authoritative tone it uses for correct information. It's getting better over time, though I suspect that's partly because the models are now programmed to say "oops, you're right!" when an error is pointed out.

This is fundamentally different from previous computing tools. When Excel does math, it's always right (assuming you entered the formula correctly). When GPS calculates a route, it's working with real map data. We're used to computers being precise and literal.

On the flip side, AI is creative. Using it is more like having a conversation with someone who's read everything but has a terrible memory and sometimes confuses fiction with reality. It can surprise you with insights you'd never considered, but it might also confidently tell you that sharks are mammals.
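Here is a deliberately tiny Python sketch that makes the quote's "guessing what the next part should be" idea concrete. It is a toy bigram word counter. It is not how ChatGPT, Claude, or Copilot actually work, since real models are neural networks trained on tokens and vastly more text. The corpus and the function names are made up for illustration. The code only counts which word tends to follow which, then picks the most common follower. In its three-sentence training text, "are" is followed by "mammals" more often than by "fish". So the sketch confidently continues "sharks are" with "mammals".

```python
from collections import Counter, defaultdict

# Toy illustration only (hypothetical names): real LLMs are neural networks,
# not word-pair counters. This just shows "predict the next word from patterns".

def train_bigrams(text):
    """Count, for each word, which words followed it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower seen in training, or None if the word is unknown."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

def generate(follows, start, length):
    """Greedily append the most likely next word, up to `length` extra words."""
    out = [start]
    for _ in range(length):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)

corpus = "sharks are fish . whales are mammals . dolphins are mammals ."
model = train_bigrams(corpus)
print(generate(model, "sharks", 2))  # sharks are mammals
```

Nothing in the code checks whether its output is true. The answer reflects only which word pairs were most common in the training text, and that is the point the quote is making.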

In my work, I've noticed the worst hallucinations happen at two extremes:

- Vague, open-ended questions where AI fills in gaps with plausible-sounding nonsense

- Very specific, technical questions about rapidly changing topics where AI gives outdated advice

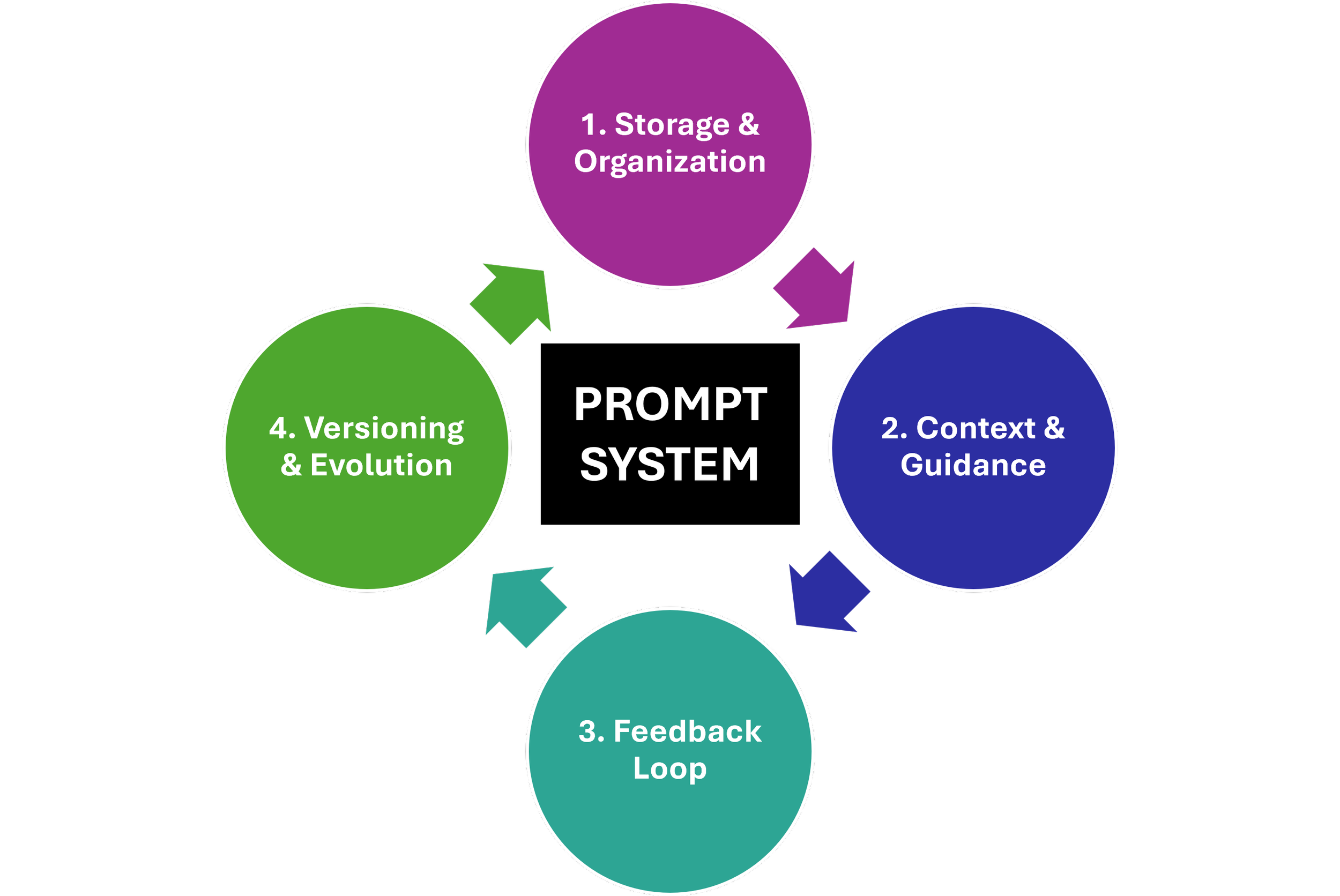

If you use AI to brainstorm or understand something, expect to engage back and forth. Work through a topic or solve a problem using structured prompts, like these ones I compiled.

And of course, always verify anything important like research or stats.

The same rule applies to Wikipedia, Google results, or any other source: check your facts, especially for work that matters.

For me, the creativity is worth the occasional nonsense, but only if I stay skeptical.

My advice is to keep questions and prompts as specific as you can. For very detailed work, though, you'll need other sources of information, and you should cross-check things with trusted websites and experts.

But...it's dangerous

The concerns are real. AI can be manipulative. There are already reports of people developing unhealthy emotional attachments to chatbots, or AI being used to create increasingly sophisticated scams and misinformation. In therapy circles, there's growing worry about AI addiction and psychological manipulation.

But here's the thing: we live in a world full of designed-to-be-addictive products. Social media algorithms, gaming, even our phones are engineered to capture our attention and keep us hooked. Sugar, tobacco, alcohol, processed food, caffeine—the list goes on. The solution isn't to avoid everything potentially harmful, but to approach it with awareness and moderation.

We don't stop driving because cars are dangerous. We wear seatbelts, follow traffic laws, and teach our kids to look both ways. We don't stop using the internet because it's full of misinformation; we learn to verify sources and think critically.

The same applies to AI. Don't believe everything it tells you. Don't get drawn into deep emotional relationships with it. Don't use it for manipulation or harm. Treat it like any other powerful tool—with respect and caution.

And yes, regulators need to step up, especially when it comes to protecting kids. But while we're waiting for that, we can start by being smarter users ourselves.

The risk isn't in the technology existing; it's in using it blindly.

But...it's making us dumb

Technology is definitely changing us, and a recent MIT study raised alarm bells about how generative AI affects our brain activity. The study looked at essay writing across three groups:

- People using AI

- People using a search engine

- People only using their own brain

The group using only their brain showed the strongest brain activity and better memory recall, followed by search engine users, with AI users in last place.

That's concerning. I want strong brain activity, neurons firing, connections being made.

I feel my brain changing with all the tech in my life. I feel it when I mindlessly scroll on my phone to "take a break," zone out in front of the TV, or can't stand even 5 minutes of silent meditation. I also can't recall phone numbers anymore, not like I used to before I had a cell phone.

But really, we've been outsourcing mental tasks to technology for centuries. Socrates complained that writing would destroy memory, because students would have less incentive to memorize things. We stopped doing complicated math in our heads when we got slide rules and then calculators. GPS replaced our sense of direction. Each time, we worried we were getting dumber, but we were actually freeing up mental space for...more complex thinking? Or maybe just YouTube.

The question isn't whether AI will change how we think—it will. The question is whether we can be intentional about what we delegate and what we keep doing ourselves.

I still write first drafts by hand in my notebook, away from my phone and internet. It's painful at first, but 30 minutes in, I'm motivated to keep going. If I started on my computer, I'd be distracted within a few sentences.

I still read paper books because they are the best technology ever invented.

So yes, keep fighting to use that grey matter. We don't want to end up like the humans in WALL-E. But maybe the goal isn't to avoid AI entirely; it's to be deliberate about when we use our brains and when we let the machines help.

But...my data

AI companies are definitely building detailed profiles of their users. Every conversation with ChatGPT, Claude, or others becomes part of your digital footprint. They're learning not just what you ask, but how you think, what you struggle with, what you're curious about.

I follow a simple rule: don't share anything with AI that you wouldn't want leaked or sold later. I avoid using it for therapy, health questions, financial planning, or sensitive work details. If OpenAI or Anthropic ever decides to monetize user data differently, I don't want my conversations ending up in the hands of insurance companies, banks, or employers.

But let's be honest—we've been riding this data collection train for years. Facebook knows your social connections and political leanings. Google reads your emails and knows your search history. Amazon tracks your purchases and listens through Alexa. Your mobile provider knows where you go. Your credit card company knows what you buy and where. My grocery chain knows all about my sugar cravings.

AI is just the latest addition to an already invasive digital ecosystem. That doesn't make it right, but singling out AI while ignoring the rest feels inconsistent.

The real issue is that we're creating these detailed profiles without meaningful consent or control. Most of us click "agree" on terms of service without reading them. We need better data protection laws, but we also need to be more intentional about what we share and with whom.

The privacy conversation shouldn't just be about AI; it should be about reclaiming some control over our digital lives as a whole.

So how do you engage thoughtfully?

So I'm on this AI train. A little skeptical, sitting in the back, but I'm on this train. I'm curious about how this technology is changing how we work, make decisions, and maybe even how we think. The question isn't whether to engage, but how to do it thoughtfully.

Start small and specific. Don't jump straight into having AI write your important emails or reports. Try it for brainstorming a fun idea, editing a draft, or explaining concepts you're trying to understand. Get a feel for where it's helpful and where it falls short. Read my article on writing better with AI.

Always verify. Treat AI output like you would a junior colleague's first draft—it might be brilliant, but it needs your review. Check facts, sources, and logic. I've caught AI making up citations, getting dates wrong, and confidently stating things that aren't true.

Set boundaries. I don't put sensitive personal info, health information, client details, financial information, or my deepest, darkest thoughts into AI tools. It's the same rule I follow with any cloud service: if a leak would be a problem, don't put it there.

Use it as a thinking partner, not a replacement. AI is great for "what if" scenarios, helping you think through problems from different angles, or getting unstuck when you're staring at a blank page. But the final decisions, the strategy, the human judgment—that's still yours. Read this to learn more about adding context to prompts for better results.

Stay curious about the limitations. The more you use it, the more you'll notice patterns in where it struggles. That awareness makes you a better user and helps you avoid the pitfalls.

You don't have to become an AI power user overnight. But understanding what it can and can't do? That feels essential in 2025.